Evaluation Sovereignty

2025-05-08

Summary

As AI systems scale in capability and risk, evaluation protocols—not just models—are emerging as sovereign assets. This piece outlines a framework for cryptographic disclosure, mineral-aware export governance, and secure evaluation sharing in a post-classical technological regime.

Evaluation Sovereignty: Securing AI through Cryptographic Disclosure and Mineral-Aware Governance

In the emerging geopolitics of artificial intelligence, the most overlooked vulnerability is not the AI model itself but its evaluation. As AI systems acquire national security relevance, evaluation protocols (benchmarks, risk assessments, performance disclosures) are becoming high-value artifacts. These protocols expose model capabilities, failure modes, and potential misuse vectors, so their dissemination must be governed with the same precision as hardware and software exports.

This piece summarizes the core arguments and proposals in Evals as National Security: Systemic Pathways for Disclosure, a technical brief that offers a multi-layered approach to reconciling innovation with control in the AI security landscape.

From Model-Centric to Evaluation-Centric Risk

Contemporary governance frameworks tend to fixate on model access, parameter counts, or chip thresholds (e.g., FLOPS ceilings). This lens is insufficient. Evaluations, particularly red-teaming procedures, CBRN capability assessments, or memory fidelity tests, can surface capabilities that were unknown even to the developers. In this context, the evaluation is not a derivative artifact but a primary security object.

Large Language Models (LLMs), when evaluated across information-retrieval, code synthesis, and reasoning tasks, exhibit emergent properties that can be exploited for signals intelligence, software reconnaissance, and automated deception. Recent case studies indicate that little technical sophistication is now required to use LLMs in adversarial operations, which lowers the barrier to entry for state and non-state actors alike.

Policy Proposal I: Cryptographic Disclosure Pathways

To secure evaluation sharing without stifling collaboration, we propose integrating verifiable cryptographic primitives into evaluation dissemination protocols:

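- Verifiable Delay Functions (VDFs): Force a minimum amount of sequential computation before an output exists, while keeping that output cheap to verify, so that results on reasoning tasks are temporally gated and cannot be pre-computed or manipulated.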

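- Proofs of Replication (PoReps): Allow a prover to demonstrate that it holds a dedicated copy of an authorized dataset without revealing the proprietary data. Tying that dataset to a specific training run requires a further attestation of the training process.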

- Commit-and-Reveal Protocols: Make post-hoc model tampering detectable by requiring a hash commitment to the model before evaluation begins, which is checked against the model revealed afterwards.
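The commit-and-reveal step can be sketched in a few lines. This is a minimal illustration, assuming the committed object is a serialized model artifact and that SHA-256 with a random 32-byte nonce is an acceptable commitment; the function names `commit` and `verify_reveal` are hypothetical and not taken from the brief.

```python
import hashlib
import hmac
import secrets


def commit(model_bytes: bytes) -> tuple[str, bytes]:
    """Publish the digest before evaluation; keep the nonce private until reveal."""
    # A fixed-length random nonce hides the artifact and prevents guessing attacks.
    nonce = secrets.token_bytes(32)
    digest = hashlib.sha256(nonce + model_bytes).hexdigest()
    return digest, nonce


def verify_reveal(commitment: str, nonce: bytes, revealed_bytes: bytes) -> bool:
    """After evaluation, check that the revealed artifact matches the commitment."""
    expected = hashlib.sha256(nonce + revealed_bytes).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, commitment)


# Hypothetical usage: the developer commits, is evaluated, then reveals.
commitment, nonce = commit(b"serialized-model-weights-v1")
untampered = verify_reveal(commitment, nonce, b"serialized-model-weights-v1")
tampered = verify_reveal(commitment, nonce, b"serialized-model-weights-v2")
```

Here `untampered` is true and `tampered` is false: any change to the artifact after the commitment is published changes the digest. What the sketch does not show is how an evaluator confirms that the committed artifact is the one actually served during evaluation, which is an institutional as much as a cryptographic question.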

These tools are foundational to building trust in multilateral evaluation benchmarks—akin to trusted hardware modules in traditional cryptography.
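To make the delay-function idea concrete, here is a toy sketch in the style of Wesolowski's repeated-squaring construction. It is an assumption-laden illustration, not the formal construction referenced in the brief: the modulus `TOY_N`, the 64-bit challenge prime, and all function names are chosen for this example and are far too small to be secure.

```python
import hashlib

# Toy modulus for illustration only. A real VDF needs a group of unknown
# order, e.g. an RSA modulus whose factorization nobody knows.
TOY_N = 1000003 * 1000033
_BASES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)


def is_prime(n: int) -> bool:
    """Miller-Rabin test; these fixed bases are deterministic for 64-bit inputs."""
    if n < 2:
        return False
    for p in _BASES:
        if n % p == 0:
            return n == p
    d, s = n - 1, 0
    while d % 2 == 0:
        d, s = d // 2, s + 1
    for a in _BASES:
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = x * x % n
            if x == n - 1:
                break
        else:
            return False
    return True


def challenge_prime(x: int, y: int, t: int) -> int:
    """Derive a prime challenge from the transcript (Fiat-Shamir style)."""
    counter = 0
    while True:
        data = f"{x}|{y}|{t}|{counter}".encode()
        candidate = int.from_bytes(hashlib.sha256(data).digest()[:8], "big") | 1
        if is_prime(candidate):
            return candidate
        counter += 1


def evaluate(x: int, t: int, n: int = TOY_N) -> int:
    """The slow part: t squarings that must be done one after another."""
    y = x % n
    for _ in range(t):
        y = y * y % n
    return y


def prove(x: int, y: int, t: int, n: int = TOY_N) -> int:
    """Proof pi = x^(floor(2^t / l)) mod n for the challenge prime l."""
    l = challenge_prime(x, y, t)
    return pow(x, (1 << t) // l, n)


def verify(x: int, y: int, t: int, proof: int, n: int = TOY_N) -> bool:
    """The fast part: check pi^l * x^(2^t mod l) == y without redoing t squarings."""
    l = challenge_prime(x, y, t)
    r = pow(2, t, l)
    return pow(proof, l, n) * pow(x, r, n) % n == y


# Hypothetical usage: x could be derived from a committed evaluation transcript.
x, t = 5, 2000
y = evaluate(x, t)
proof = prove(x, y, t)
accepted = verify(x, y, t, proof)
```

The asymmetry is the point: `evaluate` needs `t` sequential squarings, while `verify` needs only a few modular exponentiations. In an evaluation setting, the input `x` would plausibly be bound to a commitment so that the output cannot exist before the delay has elapsed, but how to bind it is a design choice this sketch leaves open.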

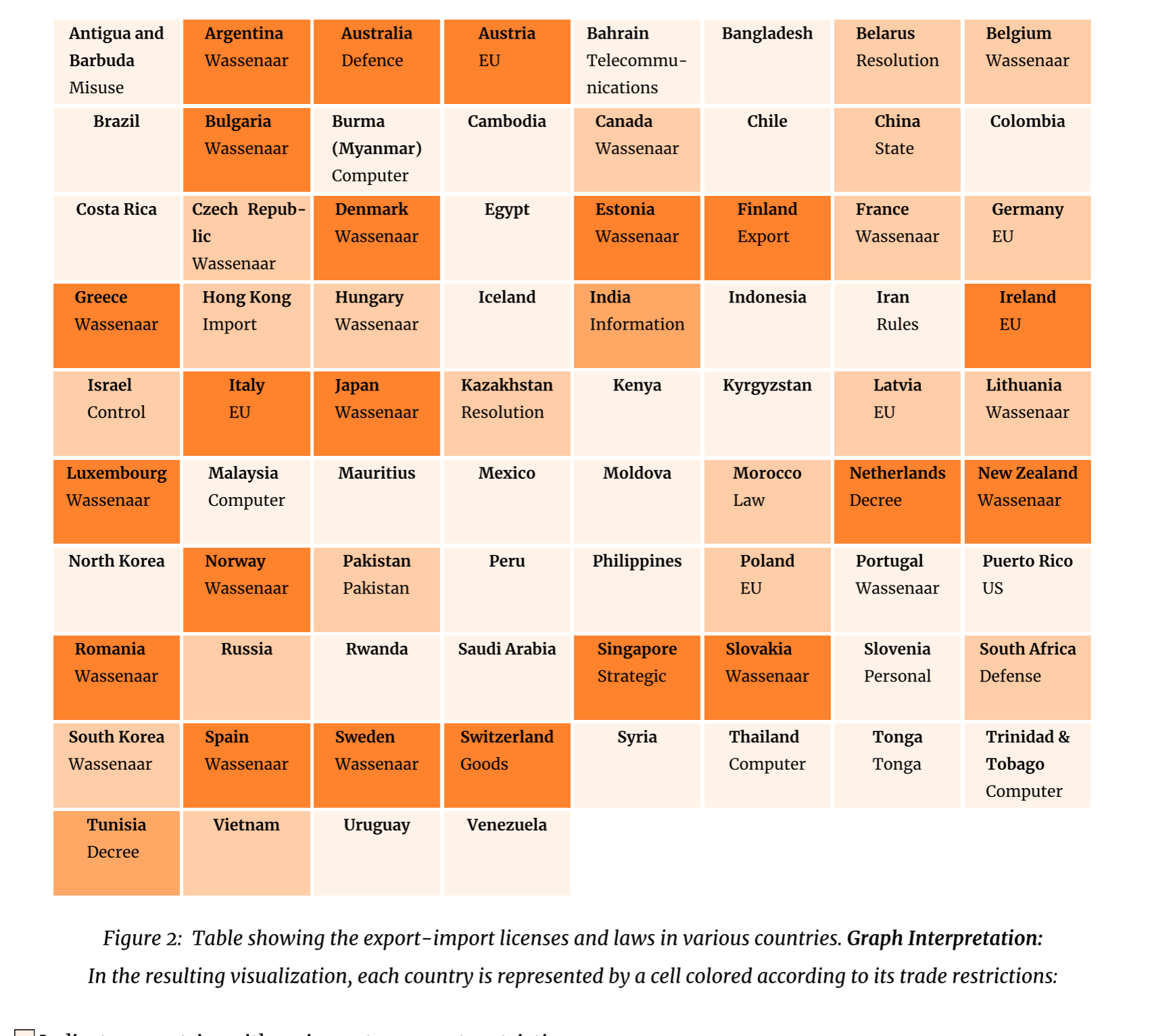

Policy Proposal II: Minerals-First Export Control

Regulatory frameworks must move beyond compute-based thresholds toward material-based criteria, particularly in the transition from classical to mesoscopic computing regimes. Semiconductor innovation is increasingly shaped by engineered material platforms such as indium arsenide–aluminum (InAs–Al) heterostructures, which are being investigated as hosts for the Majorana modes that topological quantum computation would rely on.

We propose a “minerals-first” compliance workflow, where:

- Export licensing is contingent on quantum coherence parameters (QCPs) of chip materials.

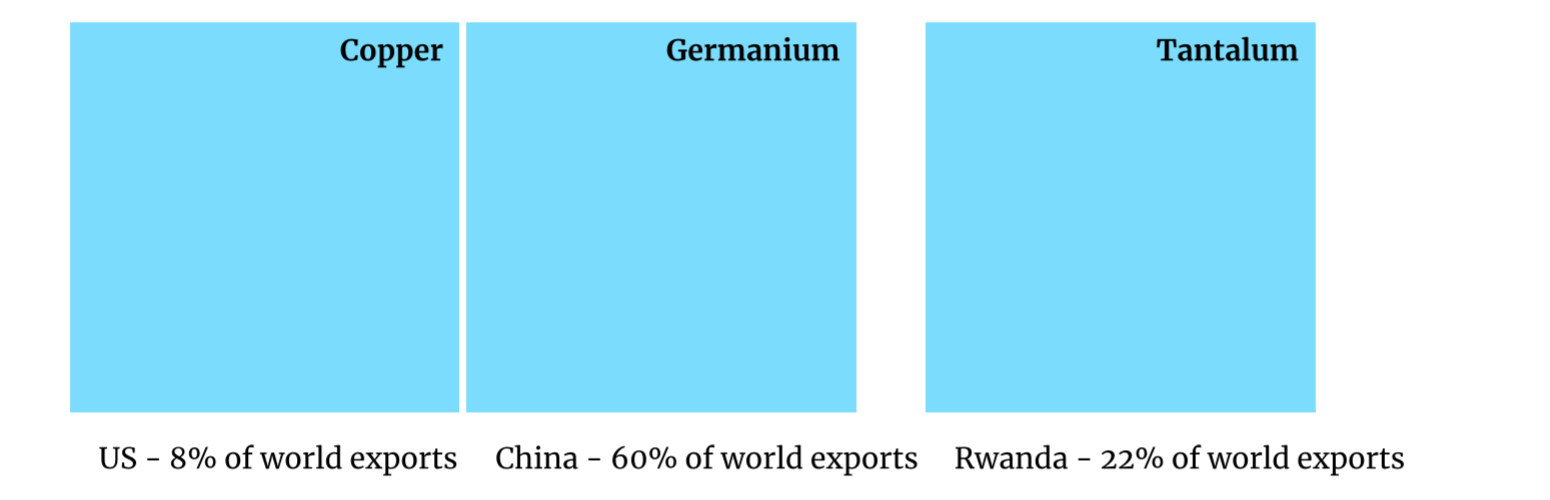

- Critical minerals such as germanium, gallium, and indium (a constituent of InAs–Al) are tracked through AI hardware fabrication.

- End-use verification integrates both model performance and material provenance.
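The three checks in this workflow can be read as a single licensing review. The sketch below is purely illustrative: the threshold `QCP_COHERENCE_LIMIT_US`, the use of a coherence time as a stand-in for a "quantum coherence parameter", and the `ExportRequest` fields are all hypothetical, since the brief summary does not define concrete values or data formats.

```python
from dataclasses import dataclass, field

# Hypothetical values for illustration only; the source defines no thresholds.
QCP_COHERENCE_LIMIT_US = 100.0
# Materials named in the list above.
TRACKED_MATERIALS = {"germanium", "gallium", "InAs-Al"}


@dataclass
class ExportRequest:
    coherence_time_us: float  # assumed stand-in for a quantum coherence parameter
    # Maps material name -> whether provenance documentation was supplied.
    materials: dict[str, bool] = field(default_factory=dict)
    evaluation_flagged: bool = False  # outcome of model-performance review


def review(request: ExportRequest) -> list[str]:
    """Return reasons to withhold a license; an empty list means no objection."""
    reasons = []
    if request.coherence_time_us >= QCP_COHERENCE_LIMIT_US:
        reasons.append("coherence parameter at or above licensing threshold")
    for material, documented in sorted(request.materials.items()):
        if material in TRACKED_MATERIALS and not documented:
            reasons.append(f"missing provenance for {material}")
    if request.evaluation_flagged:
        reasons.append("model evaluation flagged for end-use review")
    return reasons


# Hypothetical usage.
routine = ExportRequest(5.0, {"germanium": True, "silicon": False})
sensitive = ExportRequest(250.0, {"gallium": False}, evaluation_flagged=True)
routine_reasons = review(routine)
sensitive_reasons = review(sensitive)
```

Returning a list of reasons rather than a single yes/no keeps the material, provenance, and evaluation checks separately auditable, which matters if different agencies own different checks.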

Drawing inspiration from Japan’s defense transfer model, we advocate for a system of computational disclosure linked to mineral flows, creating a traceable and enforceable control layer.

Policy Proposal III: Surprisal as Evaluation Signal

Building on information theory, we introduce the concept of evaluation surprisal: a formal measure of how improbable an observed evaluation result is under prior expectations of model behavior. High-surprisal results (e.g., unexpected gains in chemical synthesis or strategic reasoning) signal the need for heightened disclosure controls and may trigger provisional export restrictions.
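One simple way to operationalize this, assuming a forecast distribution over outcome bins is registered before the evaluation runs, is the standard information-theoretic surprisal, -log2 p(outcome). The bin labels, the forecast values, and the 4-bit review threshold below are hypothetical choices for illustration; the brief may define the measure differently.

```python
import math


def surprisal_bits(forecast: dict[str, float], observed: str) -> float:
    """Surprisal -log2 p(observed) under a pre-registered forecast distribution."""
    total = sum(forecast.values())
    if not math.isclose(total, 1.0, abs_tol=1e-9):
        raise ValueError("forecast probabilities must sum to 1")
    p = forecast.get(observed, 0.0)
    if p <= 0.0:
        # An outcome the forecast ruled out entirely is maximally surprising.
        return math.inf
    return -math.log2(p)


def needs_review(forecast: dict[str, float], observed: str,
                 threshold_bits: float = 4.0) -> bool:
    """Flag results whose surprisal meets a (hypothetical) regulatory threshold."""
    return surprisal_bits(forecast, observed) >= threshold_bits


# Hypothetical forecast of capability level on a sensitive benchmark.
forecast = {"low": 0.70, "medium": 0.25, "high": 0.05}
expected_case = surprisal_bits(forecast, "low")
surprising_case = surprisal_bits(forecast, "high")
flagged = needs_review(forecast, "high")
```

With this forecast, a "low" result carries about half a bit of surprisal, while a "high" result carries a little over four bits and would be flagged. The measure is only as good as the forecast it is scored against, so who registers the forecast, and when, becomes part of the governance question.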

This framework enables regulators to move from input-based assessments (model size, training data) to output-contingent governance, more accurately reflecting the real-world risk surface of advanced AI systems.

Toward Evaluation Diplomacy

What arms control treaties were to nuclear capability, evaluation protocols will become to frontier AI. They must be treated as sovereign tools—negotiated, secured, and, when shared, cryptographically verifiable. The challenge is not only technical but institutional: who has the authority to certify model claims, and under what disclosure terms?

This work argues for a formalized infrastructure of evaluation diplomacy—backed by multilateral benchmarks, interoperable cryptographic standards, and mineral-export coordination. Without this, we risk entering a regime where evaluation itself becomes a contested intelligence vector.

For full context, references, and technical definitions—including formal constructions of VDFs and case analyses of mesoscopic subterfuge—please contact me directly.

Jonas Kgomo is a researcher focused on the intersection of frontier AI, critical materials, and security frameworks for digital infrastructure.