Mutually Assured Evaluations

2025-03-02

Summary

Mutually Assured Evaluations (MAE) are cooperative systems designed to align global AI development with national security goals by incentivizing transparent disclosure of AI system capabilities. These structures act as trust mechanisms, enabling countries and developers to exchange evaluations of performance, risk, and capabilities. While MAE can strengthen oversight and reduce reliance on coercive export controls, implementation may be hampered by inconsistent global participation and information asymmetry.

Mutually Assured Evaluations: Trust as Infrastructure in the AI Age

The global race for artificial intelligence (AI) capability is not just a matter of innovation—it's increasingly a question of security, coordination, and trust. As powerful AI systems diffuse across jurisdictions, the traditional tools of geopolitical governance—export controls, embargoes, and licensing regimes—are proving insufficient.

To address this, the Evals As National Security policy brief proposes a novel framework: Mutually Assured Evaluations (MAE). Like the arms control mechanisms of the nuclear era, MAE offers a systemic way to manage risk—through transparency, cooperation, and shared metrics.

What Are Mutually Assured Evaluations?

MAE are structured protocols that incentivize the open disclosure of AI capabilities, behaviors, and risks among nations, labs, and regulators. By creating a shared technical language for evaluating systems, they align diverse actors around verifiable safety norms without relying solely on coercive enforcement.

The framework builds on three evaluation modalities:

- Performance (task-specific outcomes)

- Capabilities (inferred generalization and potential)

- Human-risk effects (social persuasion, bias, misuse)

These evaluations are shared through secure information channels, audited for integrity, and used to inform decisions ranging from model deployment to export permissions.
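To make the idea of an integrity-audited disclosure concrete, here is a minimal sketch in Python. It is an illustration, not the brief's specification: the `EvaluationRecord` class, its field names, and the choice of a SHA-256 digest over canonical JSON are all assumptions introduced here. The only thing taken from the text is the three modalities and the notion that a shared record can be checked for tampering.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class EvaluationRecord:
    """Hypothetical disclosure record; all field names are illustrative."""
    model_id: str
    performance: dict   # task-specific outcomes, e.g. {"task_a": 0.82}
    capabilities: dict  # inferred generalization and potential
    human_risk: dict    # social persuasion, bias, misuse

    def digest(self) -> str:
        # Serialize with sorted keys so identical content always
        # produces an identical hash, regardless of insertion order.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def verify(record: EvaluationRecord, published_digest: str) -> bool:
    """Return True if the record matches the digest the discloser published."""
    return record.digest() == published_digest
```

An auditor who receives a record and a previously published digest can call `verify` to detect any change to the scores. A real scheme would likely add digital signatures so the digest itself can be attributed to the discloser; that is outside this sketch.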

Why This Matters

Opaque evaluation practices today allow actors to understate capabilities, obscure risks, or bypass export thresholds. This invites not only accidents but also geopolitical miscalculations.

MAE proposes a shift:

- From competition over secrecy to cooperation through evaluation

- From unilateral restrictions to mutual verification

- From punitive export controls to preemptive transparency

This shift helps nations ensure that emerging AI systems are secure by design, reducing the likelihood of adversarial development, espionage, or unintentional harm.

Incentives: Aligning Evaluation With Security

In MAE, trust becomes a tradable asset. Actors who participate in good-faith disclosures and adhere to agreed-upon safety norms gain:

- Access to collaborative testbeds (like Elo-based evaluations)

- Favorable regulatory treatment or compute access

- Enhanced geopolitical standing as “trusted” developers

MAE doesn’t replace export controls; it augments them with visibility. For example, if one country demonstrates that its frontier model complies with MAE standards, others may ease restrictions or form cooperative development pacts.
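For readers unfamiliar with the Elo-based evaluations mentioned in the list above, the standard Elo update is short enough to show in full. The sketch below assumes a head-to-head comparison between two models and a K-factor of 32; both the pairing setup and the K value are assumptions for illustration, since the text does not say how a collaborative testbed would be configured.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> tuple[float, float]:
    """Return updated ratings after one comparison.

    score_a is 1.0 if A wins, 0.5 for a draw, 0.0 if A loses.
    k (assumed 32 here) controls how fast ratings move.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    # B's actual and expected scores are the complements of A's.
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b
```

Because each update depends only on pairwise outcomes, participants can contribute comparison results without disclosing model internals, which is one reason rating schemes of this kind suit a shared testbed.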

The Tools: Registries, Protocols, and Shared Standards

The proposal doesn’t stop at theory. It includes:

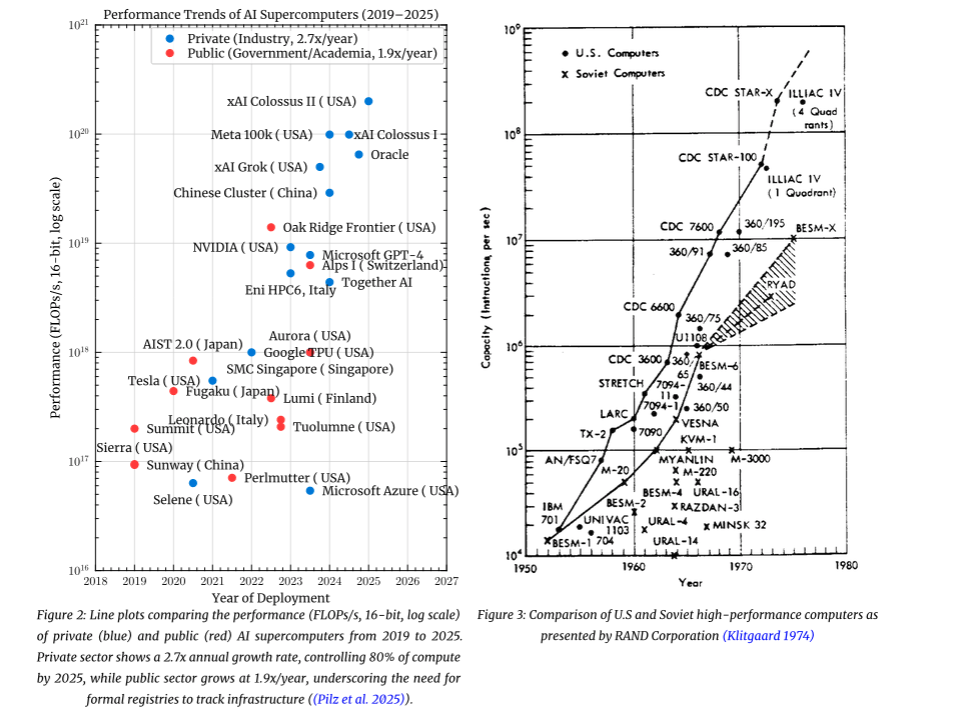

- Formal registries to track chip origin, compute thresholds, and diffusion patterns

- Evaluation Responsible Disclosure Policies (REDP) to manage sensitive information

- Verification protocols to confirm claims about model safety, robustness, and intent

These systems are interoperable with existing export regimes (e.g., Wassenaar Arrangement, BIS controls) but fill in the critical gap of functional transparency.
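The registry item in the list above can be pictured as a small data structure. The following Python sketch is hypothetical throughout: the `RegistryEntry` fields, the `ComputeRegistry` class, and especially the `COMPUTE_THRESHOLD_FLOP` value are placeholders chosen for illustration and do not come from the brief.

```python
from dataclasses import dataclass

# Hypothetical reporting threshold in training FLOP; a placeholder,
# not a figure taken from the proposal.
COMPUTE_THRESHOLD_FLOP = 1e26


@dataclass(frozen=True)
class RegistryEntry:
    model_id: str
    developer: str
    chip_origin: str      # e.g. country or fab where the chips originated
    training_flop: float  # total training compute


class ComputeRegistry:
    """Illustrative in-memory registry keyed by model_id."""

    def __init__(self, threshold_flop: float = COMPUTE_THRESHOLD_FLOP):
        self.threshold_flop = threshold_flop
        self._entries: dict[str, RegistryEntry] = {}

    def register(self, entry: RegistryEntry) -> None:
        # Reject duplicates so each model has exactly one record.
        if entry.model_id in self._entries:
            raise ValueError(f"duplicate model_id: {entry.model_id}")
        self._entries[entry.model_id] = entry

    def above_threshold(self) -> list[RegistryEntry]:
        """Entries whose training compute meets or exceeds the threshold."""
        return [e for e in self._entries.values()
                if e.training_flop >= self.threshold_flop]

    def by_chip_origin(self, origin: str) -> list[RegistryEntry]:
        """Entries trained on chips from the given origin."""
        return [e for e in self._entries.values() if e.chip_origin == origin]
```

A production registry would need authenticated submissions and cross-border access rules; the sketch only shows the kinds of queries (threshold crossings, chip provenance) that such a registry would answer.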

Challenges and Limitations

Despite its promise, MAE faces real implementation barriers:

- Asymmetric capabilities: Some nations may lack the compute or evaluative infrastructure to participate meaningfully

- Inconsistent norms: Disagreements over what counts as “risky” or “safe” could erode shared standards

- Information leakage concerns: Private actors may resist disclosing capabilities that expose IP or confer strategic disadvantage

Still, the alternative—fragmented regulation, secretive escalation, and governance by accident—is far riskier.

Conclusion

MAE stands in stark contrast to what the paper terms Mutual Assured AI Malfunction—a world where distrust, disinformation, and adversarial secrecy dominate the AI space. In MAE, transparency is deterrence, and shared evaluation is defense.

By building trust into the infrastructure of AI development, Mutually Assured Evaluations offer a path toward a world where innovation and security reinforce rather than undermine each other.